当前位置:网站首页>Kaggle competition two Sigma connect: rental listing inquiries

Kaggle competition two Sigma connect: rental listing inquiries

2022-07-06 12:00:00 【Want to be a kite】

Kaggle competition , Website links :Two Sigma Connect: Rental Listing Inquiries

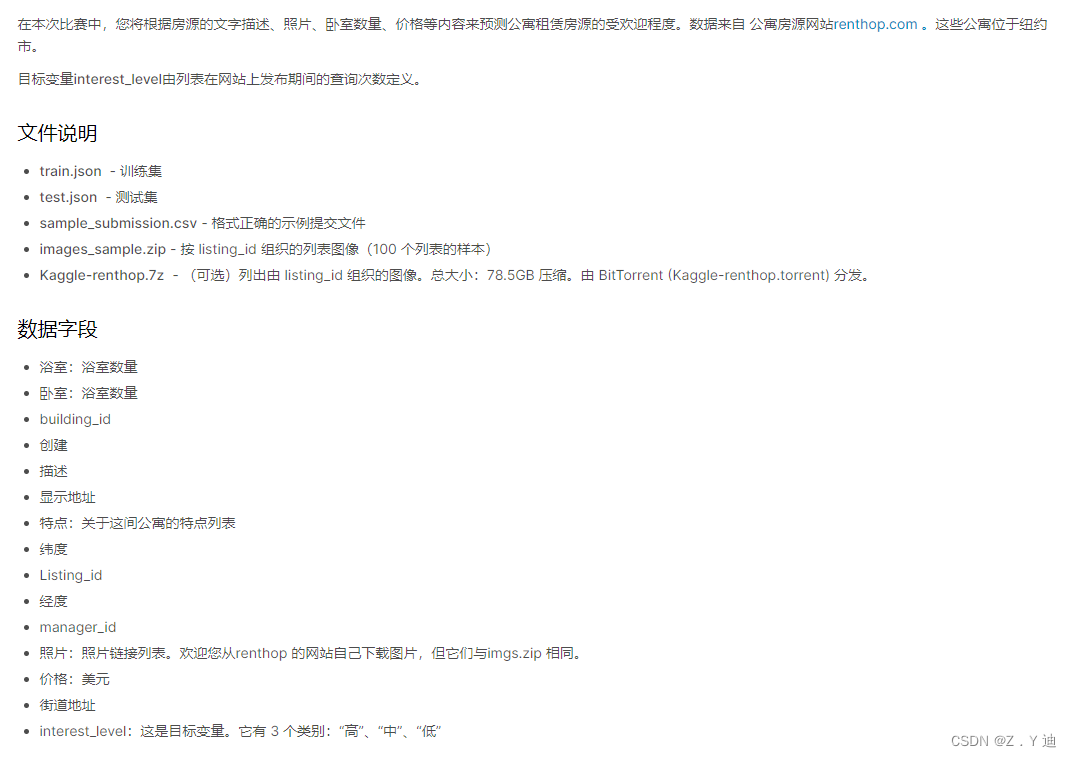

According to the data information on the rental website , Predict the popularity of the house .( This is a question of classification , Contains the following data , Variable with category 、 Integer variable 、 Text variable ).

Random forest model

Use sklearn Complete modeling and prediction . The data set can be downloaded from the official website of the competition .

import numpy as np

import pandas as pd

import zipfile # The official website data set is zip type , Use zipfile open

import os

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import train_test_split

from sklearn.metrics import log_loss

for dirname, _, filenames in os.walk(r'E:\Kaggle\Kaggle_dataset01\two_sigma'): # Change your path

for filename in filenames:

print(os.path.join(dirname, filename))

train_df = pd.read_json(zipfile.ZipFile(r'E:\Kaggle\Kaggle_dataset01\two_sigma\train.json.zip').open('train.json'))

test_df = pd.read_json(zipfile.ZipFile(r'E:\Kaggle\Kaggle_dataset01\two_sigma\test.json.zip').open('test.json'))

# Here is a customized data processing function .

def data_preprocessing(data):

data['created_year'] = pd.to_datetime(data['created']).dt.year

data['created_month'] = pd.to_datetime(data['created']).dt.month

data['created_day'] = pd.to_datetime(data['created']).dt.day

data['num_description_words'] = data['description'].apply(lambda x:len(x.split(' ')))

data['num_features'] = data['features'].apply(len)

data['num_photos'] = data['photos'].apply(len)

New_data = data[['created_year','created_month','created_day','num_description_words','num_features','num_photos','bathrooms','bedrooms','latitude','longitude','price']]

return New_data

train_x = data_preprocessing(train_df)

train_y = train_df['interest_level']

test_x = data_preprocessing(test_df)

X_train,X_val,y_train,y_val = train_test_split(train_x,train_y,test_size=0.33) # Data segmentation

clf = RandomForestClassifier(n_estimators=1000) # Random forest model

clf.fit(X_train,y_train)

y_val_pred = clf.predict_proba(X_val)

log_loss(y_val,y_val_pred)

y_test_predict = clf.predict_proba(test_x)

labels2idx = {

label:i for i,label in enumerate(clf.classes_)}

sub = pd.DataFrame()

sub['listing_id'] = df['listing_id']

for label in labels2idx.keys():

sub[label] = y[:,labels2idx[label]]

# Save the submission

#sub.to_csv('submission.csv',index=False) # Competition submission !

Run the above code , The effect of random forest is not very good . Some people will ask why there is no normalization preprocessing for data ? In fact, there is no need to normalize the data when using random forest , So I didn't do . If you want to do it , Try to verify it yourself . If you want to use random forest to improve the robustness of the model , Consider improving the feature engineering part , Get better features !

边栏推荐

- 数据库面试常问的一些概念

- 5G工作原理详解(解释&图解)

- Distribute wxWidgets application

- 第4阶段 Mysql数据库

- GNN的第一个简单案例:Cora分类

- arduino UNO R3的寄存器写法(1)-----引脚电平状态变化

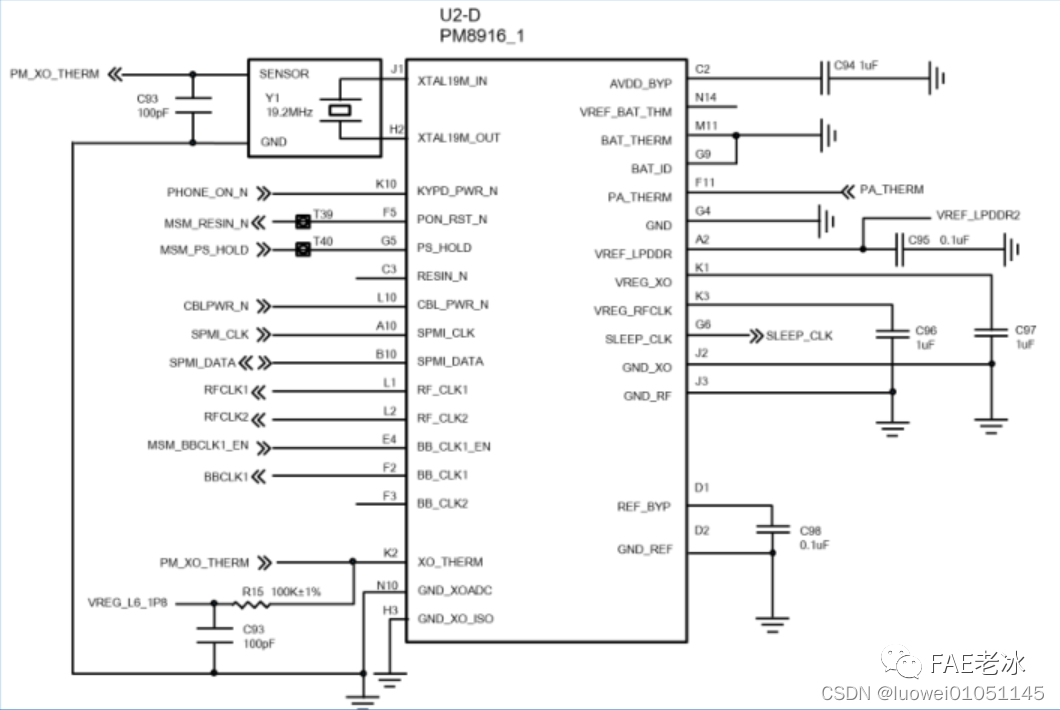

- Analysis of charging architecture of glory magic 3pro

- 【CDH】CDH/CDP 环境修改 cloudera manager默认端口7180

- Common regular expression collation

- [Presto] Presto parameter configuration optimization

猜你喜欢

随机推荐

STM32型号与Contex m对应关系

[template] KMP string matching

2019腾讯暑期实习生正式笔试

【yarn】Yarn container 日志清理

B tree and b+ tree of MySQL index implementation

There are three iPhone se 2022 models in the Eurasian Economic Commission database

2020 WANGDING cup_ Rosefinch formation_ Web_ nmap

[yarn] yarn container log cleaning

Hutool中那些常用的工具类和方法

Mall project -- day09 -- order module

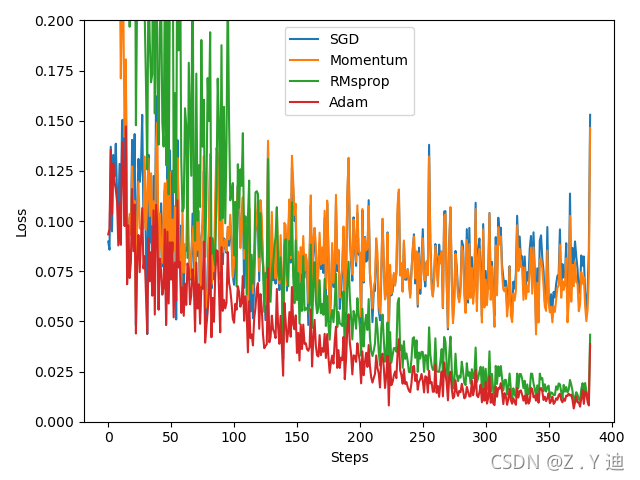

PyTorch四种常用优化器测试

锂电池基础知识

Vert. x: A simple login access demo (simple use of router)

高通&MTK&麒麟 手机平台USB3.0方案对比

Detailed explanation of 5g working principle (explanation & illustration)

Variable parameter principle of C language function: VA_ start、va_ Arg and VA_ end

Using LinkedHashMap to realize the caching of an LRU algorithm

【Flink】CDH/CDP Flink on Yarn 日志配置

Reno7 60W super flash charging architecture

STM32 如何定位导致发生 hard fault 的代码段

![Detailed explanation of Union [C language]](/img/d2/99f288b1705a3d072387cd2dde827c.jpg)

![[Flink] Flink learning](/img/2e/ff53e0795456e301f61da908c013af.png)

![[CDH] cdh5.16 configuring the setting of yarn task centralized allocation does not take effect](/img/e7/a0d4fc58429a0fd8c447891c848024.png)